Taking Project Loom for a spin

Project Loom is one of the many incubating projects in the Java JDK that aim to design and, all going well, deliver new features to Java.

Its objective is to support easy-to-use, high-throughput lightweight concurrency and new programming models on the Java platform, according to the Project’s Home Page.

I was very excited to read about the current state of Project Loom in the State of Loom article, which came out just a few days ago! The final design they have settled on is a dream come true for Java developers.

It will allow nearly any existing code that currently relies on the good old Thread class for concurrency to benefit immediately with almost no changes at all, all the while making one of the hottest features of cooler and trendier languages, async/await, look like one of those regrettable it-sounded-like-a-great-idea-at-the-time features they wish they didn’t have anymore.

It even sounds too good to be true, and whether that’s the case remains to be seen!

Luckily, State of Loom published a link to the Loom EA binaries so anyone can already take it for a spin! And that’s exactly what I did.

Here’s what I found out!

Getting started with Loom

After downloading Loom’s Linux tarball and unpacking it (I am using Ubuntu 20), I tried to create a Gradle project to play around with it, but Gradle is not ready for Loom(1) yet.

But Maven is! After writing a basic POM and a very basic Java class to test virtual Threads, I was up and running.

I used this little bash script to set my Java home and get Maven to build my project:

#!/usr/bin/env bash

export JAVA_HOME=~/programming/experiments/jdk-15

export PATH=$JAVA_HOME/bin:$PATH

java --version

cd loom-maven && mvn package

Here’s the initial POM:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<properties>

<maven.compiler.source>15</maven.compiler.source>

<maven.compiler.target>15</maven.compiler.target>

</properties>

<groupId>com.athaydes</groupId>

<artifactId>loom-maven</artifactId>

<version>1.0-SNAPSHOT</version>

</project>

And my little Java class:

package loom;

import java.util.concurrent.CountDownLatch;

public class Main {

public static void main(String[] args) {

var latch = new CountDownLatch(10);

for (int i = 0; i < 10; i++) {

Thread.startVirtualThread(() -> {

System.out.println("Hello from Thread " + Thread.currentThread().getName());

latch.countDown();

});

}

try {

latch.await();

System.out.println("All Threads done");

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

The reason for using a latch in this code is so that the print-outs are actually shown! As it turns out, virtual Threads, as they’re now calling the lightweight Java Threads Loom is introducing, are daemon Threads by default (i.e. background Threads that do not stop the JVM from shutting down, just like regular daemon Threads).

As a side note: the new Javadocs for java.lang.Thread’s setDaemon(boolean on) method have this to say about daemon Threads:

The daemon status of a virtual thread is meaningless and is not changed by this method (the isDaemon method always returns true).

The above warning is missing in Thread.Builder#daemon(boolean)’s Javadocs, so remember this.
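The daemon behaviour is easy to check for yourself. The following is a minimal sketch, assuming a JDK where virtual threads are a final feature (21 or later); its `Thread.ofVirtual()` builder may not match the Loom EA build used in this article, and the class name `DaemonCheck` is made up for illustration.

```java
// Sketch only: assumes JDK 21+, whose Thread.ofVirtual() builder may differ from the Loom EA build.
class DaemonCheck {

    // Creates a virtual thread without starting it and reports its daemon flag.
    static boolean virtualThreadIsDaemon() {
        Thread thread = Thread.ofVirtual().unstarted(() -> { });
        return thread.isDaemon();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Virtual thread is daemon: " + virtualThreadIsDaemon());

        // Joining is a simple alternative to a latch when there is only one thread to wait for.
        Thread worker = Thread.startVirtualThread(
                () -> System.out.println("Hello from a virtual thread"));
        worker.join();
    }
}
```

Without the `join()` call, the JVM would be free to exit before the greeting is printed, which is the same reason the latch is needed in the example above.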

I imported the code into IntelliJ without problems (I just had to create a JDK pointing to Loom’s JDK, of course, and set the Language Level to X - experimental features), but couldn’t get it to run the code… Fortunately, using mvn package to create a jar, I was able to run the code using plain java:

$ java -cp target/loom-maven-1.0-SNAPSHOT.jar loom.Main

Hello from Thread <unnamed>

Hello from Thread <unnamed>

...

All Threads done

Nice! The code runs! Looks like virtual Threads have no name though. Makes sense, if you’re going to have millions of them, as they say you can, you probably won’t remember them all by name.
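If you do want names, for example while debugging a small number of virtual Threads, a builder can assign them. This is a hedged sketch that assumes the final JDK 21+ API (`Thread.ofVirtual().name(prefix, start)`), which may not exist in this form in the EA build; `NamedVirtualThreads` and the `worker-` prefix are illustrative choices only.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch only: assumes the JDK 21+ Thread.Builder API, not necessarily the Loom EA one.
class NamedVirtualThreads {

    // Starts `count` virtual threads named worker-0, worker-1, ... and returns their names.
    static List<String> startNamed(int count) {
        // The builder appends an incrementing counter to the prefix for each thread it creates.
        Thread.Builder builder = Thread.ofVirtual().name("worker-", 0);
        List<Thread> threads = new ArrayList<>();
        List<String> names = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            Thread thread = builder.start(() -> { });
            threads.add(thread);
            names.add(thread.getName());
        }
        for (Thread thread : threads) {
            try {
                thread.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return names;
    }

    public static void main(String[] args) {
        System.out.println(startNamed(3));
    }
}
```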

1 million virtual Threads… hm… how about we try that!

Starting one million virtual Threads

Here’s a silly program that calculates the sum of the first 1 million natural numbers:

package loom;

public class Main {

public static void main(String[] args) {

long result = 0;

for (int i = 1; i <= 1_000_000; i++) {

result += i;

}

System.out.println("Result: " + result);

}

}

This program runs in 0.1 seconds and consumes 33MB (most of it the initial heap size) according to my system monitor.

But it’s boring! We want it to use all cores of our machine!! Let’s use virtual Threads to solve the problem :)

package loom;

import java.util.concurrent.CountDownLatch;

import java.util.concurrent.atomic.AtomicLong;

public class Main {

public static void main(String[] args) throws InterruptedException {

final var result = new AtomicLong();

final var latch = new CountDownLatch(1_000_000);

for (int i = 1; i <= 1_000_000; i++) {

final var index = i;

Thread.startVirtualThread(() -> {

result.addAndGet(index);

latch.countDown();

});

}

// wait for completion

latch.await();

System.out.println("Result: " + result);

}

}

This example raises a small issue: it can get tricky to wait for a result from a million Threads. If you do need that many, they are normally independent of each other, so you don’t need to merge the results later. But not in our silly example above, of course. Creating a List with one million Future objects so that we can wait on all of them would be an easy solution, but that seems awfully wasteful. That’s why I again used a CountDownLatch to block execution until all Threads we expect to run have completed their jobs!

As pointed out on Reddit, another way would be to create a single-use ExecutorService, submit all tasks, then shut it down, which will block until all tasks complete.
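A minimal sketch of that ExecutorService variant follows. It assumes JDK 21 or later, where `ExecutorService` is `AutoCloseable` and the factory method is called `Executors.newVirtualThreadPerTaskExecutor()`; the EA build used here names things differently, and `ExecutorSum` is a hypothetical class name.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicLong;

// Sketch only: assumes the JDK 21+ API names, which differ from the Loom EA build.
class ExecutorSum {

    // Adds the numbers 1..n, running each addition in its own virtual thread.
    static long sumUpTo(int n) {
        var result = new AtomicLong();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 1; i <= n; i++) {
                final long value = i;
                executor.execute(() -> result.addAndGet(value));
            }
        } // close() waits here until every submitted task has finished
        return result.get();
    }

    public static void main(String[] args) {
        System.out.println("Result: " + sumUpTo(1_000_000));
    }
}
```

The try-with-resources block plays the role of the latch: leaving it only happens once all tasks are done, so no explicit counting is needed.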

This correctly prints the result as well:

Result: 500000500000

Can you think of a way to prove that the sum of the first one million natural numbers is indeed 500_000_500_000? Hint: think about the number sequence both going forward and in reverse.
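If you would rather check the hint in code than on paper, here is a small sketch (the class and method names are made up). Writing the sequence forward and in reverse and adding them position by position gives n pairs that each add up to n + 1, which is twice the sum we want.

```java
// Sketch of the forward/reverse pairing argument; names are illustrative.
class GaussSum {

    // Pairs k (forward sequence) with n + 1 - k (reversed sequence) and halves the total.
    static long pairedSum(long n) {
        long twiceTheSum = 0;
        for (long k = 1; k <= n; k++) {
            twiceTheSum += k + (n + 1 - k); // every pair adds up to n + 1
        }
        return twiceTheSum / 2;
    }

    // The closed form that the pairing argument leads to: n * (n + 1) / 2.
    static long closedForm(long n) {
        return n * (n + 1) / 2;
    }

    public static void main(String[] args) {
        System.out.println(pairedSum(1_000_000));
        System.out.println(closedForm(1_000_000));
    }
}
```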

This example may not be realistic, but it does show that you can, in fact, start up 1 million virtual Threads in a span of just a second or so!

This ran on my machine in 1.98 seconds and consumed 198MB.

Curious to know just how little memory I could give the JVM and still run this example, I set -Xmx to smaller and smaller values until it crashed. It looks like we can get away with a heap as small as 6MB in this case!

$ time java -Xmx6M -cp loom-maven/target/loom-maven-1.0-SNAPSHOT.jar loom.Main

Result: 500000500000

java -Xmx6M -cp loom-maven/target/loom-maven-1.0-SNAPSHOT.jar loom.Main 2.37s user 0.90s system 245% cpu 1.331 total

avg shared (code): 0 KB

avg unshared (data/stack): 0 KB

total (sum): 0 KB

max memory: 45 MB

page faults from disk: 0

other page faults: 6991

You may notice that the reported maximum RAM consumed above was 45MB, much more than the 6MB given to the Java heap. That’s because the JVM itself needs memory for things like the Garbage Collector and management of its resources, which is not counted towards the application heap. This article explains that in detail if you are curious.

Just for fun, I tried the same thing with non-virtual Threads. To my surprise, the program actually ran successfully without an explicit memory limit, but it took 57 seconds and 382MB of RAM! When I limited heap memory to 50MB, the program still ran successfully, but according to my system monitor, it actually took 260MB of memory, which I think is because the JVM allocates most of the memory required by Threads off-heap.
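For reference, the non-virtual variant presumably differs from the earlier program only in how each Thread is created. The sketch below is a guess at its shape, not the exact code that produced the numbers above; `PlatformThreadSum` is a hypothetical name, and you may want to try a smaller count before going for the full million.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicLong;

// Sketch only: same summing logic as before, but with one regular (OS-backed) Thread per number.
class PlatformThreadSum {

    static long sumUpTo(int n) {
        var result = new AtomicLong();
        var latch = new CountDownLatch(n);
        for (int i = 1; i <= n; i++) {
            final long value = i;
            new Thread(() -> {
                result.addAndGet(value);
                latch.countDown();
            }).start();
        }
        try {
            latch.await(); // wait for completion
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting for threads", e);
        }
        return result.get();
    }

    public static void main(String[] args) {
        int n = args.length > 0 ? Integer.parseInt(args[0]) : 10_000;
        System.out.println("Result: " + sumUpTo(n));
    }
}
```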

This little exercise shows us two things. One is that creating a large number of virtual Threads is fast and the memory overhead is small! The other is that not all problems are worth parallelizing, as you are certainly aware.

A more realistic example: HTTP server and client

One problem where having a million different virtual Threads running at the same time is not unrealistic is an HTTP server. Because servers tend to be IO bound (i.e. they spend most of their time waiting on disk or network operations), this is one of the most obvious applications where you would want to use asynchronous programming, despite its high costs in terms of the complexity it introduces. After all, you don’t want each server to be able to handle only a few hundred simultaneous connections because each connection is holding on to its own Thread.

But can we use virtual Threads to fix that problem?

To investigate that, I decided to use RawHTTP, a very simple HTTP Java library, for a few reasons:

- I wrote it myself! That helps because I know exactly what the code is doing.

- It uses a Thread-based concurrency model (not async), perfect to test virtual Threads.

- It does not make use of ThreadLocal, which could cause problems(2) with virtual Threads.

To keep things simple, instead of using databases or making connections to other HTTP servers, I decided to simulate the server spending time on those things with Thread.sleep. That should be enough to make each connection “costly” to the server, while allowing us to test that virtual Threads do help keep resources under control.
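The effect of standing in for slow IO with Thread.sleep can be seen even without any HTTP involved. In this sketch (hypothetical `SleepingTasks` class, JDK 21+ assumed for `Thread.startVirtualThread`), every task blocks for the same pause, yet the whole batch should finish in roughly one pause rather than one pause per task, because a sleeping virtual Thread does not hold on to an OS thread.

```java
import java.util.concurrent.CountDownLatch;

// Sketch only: simulates IO-bound work with sleep; names and numbers are illustrative.
class SleepingTasks {

    // Starts `tasks` virtual threads that each sleep for `pauseMillis`; returns the elapsed time in ms.
    static long runSleepers(int tasks, long pauseMillis) {
        var latch = new CountDownLatch(tasks);
        long start = System.nanoTime();
        for (int i = 0; i < tasks; i++) {
            Thread.startVirtualThread(() -> {
                try {
                    Thread.sleep(pauseMillis); // stands in for a database or network call
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    latch.countDown();
                }
            });
        }
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return (System.nanoTime() - start) / 1_000_000;
    }

    public static void main(String[] args) {
        System.out.println("1000 sleepers took " + runSleepers(1_000, 1_000) + " ms");
    }
}
```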

A Hello World HTTP Server can be implemented with RawHTTP using virtual Threads as follows:

package loom;

import rawhttp.core.HttpVersion;

import rawhttp.core.RawHttpHeaders;

import rawhttp.core.RawHttpResponse;

import rawhttp.core.StatusLine;

import rawhttp.core.body.StringBody;

import rawhttp.core.server.TcpRawHttpServer;

import java.io.IOException;

import java.net.ServerSocket;

import java.util.Optional;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

public class Main {

private static final RawHttpResponse<?> responseOK = new RawHttpResponse<>(

null, null, new StatusLine(HttpVersion.HTTP_1_1, 200, "OK"),

RawHttpHeaders.empty(), null);

public static void main(String[] args) {

var server = new TcpRawHttpServer(new ServerOptions());

server.start((req) -> Optional.of(responseOK.withBody(

new StringBody("hello world", "text/plain"))));

}

}

class ServerOptions implements TcpRawHttpServer.TcpRawHttpServerOptions {

@Override

public ServerSocket getServerSocket() throws IOException {

return new ServerSocket(8080);

}

@Override

public ExecutorService createExecutorService() {

return Executors.newUnboundedVirtualThreadExecutor();

}

}

If you want to follow along, the Maven POM at this point should look like the one shown in this Gist.

I added the Maven Shade Plugin to make a single runnable jar, so now I can run the server with a simpler command:

$ java -jar loom-maven/target/loom-maven-1.0-SNAPSHOT-shaded.jar

To test that it’s working, we can hit it with curl:

$ curl -v localhost:8080

* Trying 127.0.0.1:8080...

* TCP_NODELAY set

* Connected to localhost (127.0.0.1) port 8080 (#0)

> GET / HTTP/1.1

> Host: localhost:8080

> User-Agent: curl/7.68.0

> Accept: */*

>

* Mark bundle as not supporting multiuse

< HTTP/1.1 200 OK

< Content-Type: text/plain

< Content-Length: 11

< Date: Sun, 17 May 2020 14:49:11 GMT

< Server: RawHTTP

<

* Connection #0 to host localhost left intact

hello world

All good so far. Notice that we’re using virtual Threads already! All we had to do was provide an ExecutorService that uses virtual Threads instead of “normal” Threads:

@Override

public ExecutorService createExecutorService() {

return Executors.newUnboundedVirtualThreadExecutor();

}

With “normal” Threads, you would never use an unbounded thread pool. But with virtual Threads, that’s fine! In fact, you do NOT even need to pool the Threads. This example just shows how easy it is to integrate with existing frameworks that rely on ExecutorService.
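To illustrate the “no pool needed” point: when an API only asks for a plain `java.util.concurrent.Executor`, a method reference is enough to get one fresh virtual Thread per task. This is a sketch under the assumption that `Thread.startVirtualThread` is available as in the examples above; `ThreadPerTask` is an illustrative name.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executor;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch only: an Executor with no pool at all, just one new virtual thread per task.
class ThreadPerTask {

    // The Thread returned by startVirtualThread is simply discarded.
    static final Executor VIRTUAL = Thread::startVirtualThread;

    // Runs `tasks` trivial jobs on the executor and returns how many completed.
    static int runAll(int tasks) {
        var completed = new AtomicInteger();
        var latch = new CountDownLatch(tasks);
        for (int i = 0; i < tasks; i++) {
            VIRTUAL.execute(() -> {
                completed.incrementAndGet();
                latch.countDown();
            });
        }
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return completed.get();
    }

    public static void main(String[] args) {
        System.out.println("Completed tasks: " + runAll(10_000));
    }
}
```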

I also wrote a client to test the server so that we can check how fast we can create requests. The client simply starts a number of threads simultaneously, then, in each thread, runs 10 requests (which should take a little over 10 seconds, as the server sleeps for 1 second before returning each response). All responses are checked for correctness, so that we’re sure that the server is handling the load gracefully:

package loom;

import rawhttp.core.RawHttp;

import rawhttp.core.RawHttpRequest;

import rawhttp.core.RawHttpResponse;

import rawhttp.core.client.TcpRawHttpClient;

import java.io.IOException;

import java.nio.charset.StandardCharsets;

import java.util.concurrent.CountDownLatch;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

public class Client {

private static RawHttpRequest createRequest() throws IOException {

return new RawHttp().parseRequest("GET http://localhost:8080/\nAccept: */*").eagerly();

}

static void run(int threads) throws IOException, InterruptedException {

System.err.println("Starting client with " + threads + " Threads");

var latch = new CountDownLatch(threads);

var request = createRequest();

for (int i = 1; i <= threads; i++) {

if (i % 100 == 0) System.err.println("Starting client Thread " + i);

final var index = i;

Thread.startVirtualThread(() -> {

var start = System.nanoTime();

Thread.startVirtualThread(() -> System.out.println(start));

try (var client = new TcpRawHttpClient(new ClientOptions())) {

for (int n = 0; n < 10; n++) {

var response = client.send(request);

verifyResponse(index, response);

}

} catch (IOException e) {

e.printStackTrace();

} finally {

latch.countDown();

}

});

}

latch.await();

System.err.println("All " + threads + " client Threads done");

}

private static void verifyResponse(int index, RawHttpResponse<?> response) {

if (response.getStatusCode() != 200) {

System.err.println(index + ": Wrong status code - " + response);

} else {

response.getBody().ifPresentOrElse(body -> {

try {

var text = body.decodeBodyToString(StandardCharsets.UTF_8);

if (!text.equals("hello world")) {

System.err.println(index + ": Wrong body - '" + text + "'");

}

} catch (IOException e) {

e.printStackTrace();

}

}, () -> System.err.println(index + ": Missing body"));

}

}

}

class ClientOptions extends TcpRawHttpClient.DefaultOptions {

@Override

public ExecutorService getExecutorService() {

return Executors.newUnboundedVirtualThreadExecutor();

}

}

The full code for this experiment can be found on this Gist.

Here’s what happened when I started the client with 1_000 threads:

$ time java -jar loom-maven/target/loom-maven-1.0-SNAPSHOT-shaded.jar client 1000 > times.csv

Hit any key to continue (time to prepare your profiling tools)...

Starting client with 1000 Threads

Starting client Thread 100

Starting client Thread 200

Starting client Thread 300

Starting client Thread 400

Starting client Thread 500

Starting client Thread 600

Starting client Thread 700

Starting client Thread 800

Starting client Thread 900

Starting client Thread 1000

All 1000 client Threads done

java -jar loom-maven/target/loom-maven-1.0-SNAPSHOT-shaded.jar client 1000

> 14.84s user 3.56s system 50% cpu 36.329 total

avg shared (code): 0 KB

avg unshared (data/stack): 0 KB

total (sum): 0 KB

max memory: 173 MB

page faults from disk: 0

other page faults: 42862

All 1_000 threads started nearly simultaneously, from what I could see in the output, and the total time was almost 15 seconds.

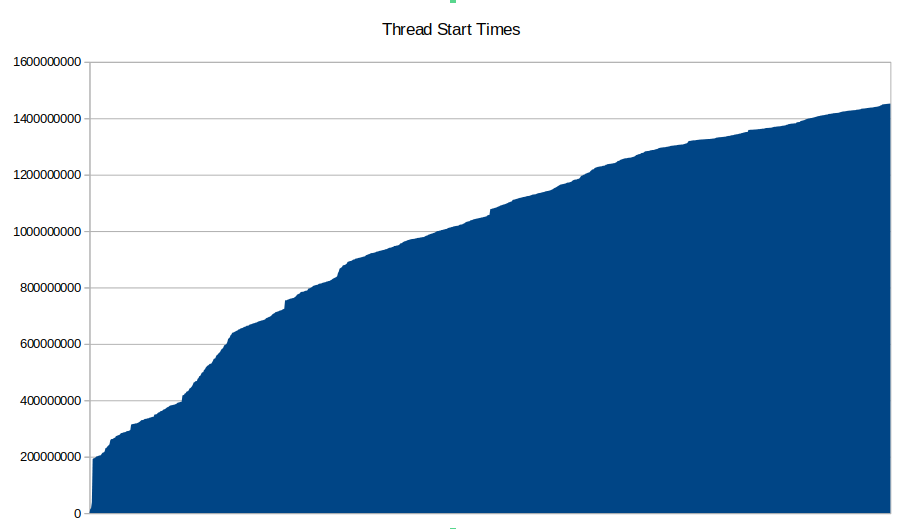

I sent the exact times the Threads started to a CSV file so that I could visualize that:

Time is on the y-axis (which shows the delta from the first start time in nanoseconds, hence it starts from 0), and I sorted it to make the data a little cleaner. After a couple of slow starters, you can see that the JVM picks up the pace very quickly and starts executing a new virtual Thread approximately every 1.265 milliseconds.

The last (1_000th) Thread starts 1.452 seconds after the first.
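The processing behind the chart is simple enough to sketch: sort the recorded `System.nanoTime()` values, subtract the first one, and look at the gaps. The helper below is an assumption about how that could be done (the class and method names are invented; the actual chart was made from the CSV file). As a sanity check on the numbers above, 1.452 seconds spread over the 999 gaps between 1_000 Threads is about 1.45 milliseconds per Thread on average, a little more than the 1.265 milliseconds seen once the slow starters are out of the way.

```java
import java.util.Arrays;

// Sketch only: turns raw start timestamps into the sorted deltas plotted in the chart.
class StartTimes {

    // Sorts the timestamps and expresses each one as a delta from the earliest.
    static long[] deltasFromFirst(long[] startNanos) {
        long[] sorted = startNanos.clone();
        Arrays.sort(sorted);
        if (sorted.length == 0) {
            return sorted;
        }
        long first = sorted[0];
        for (int i = 0; i < sorted.length; i++) {
            sorted[i] -= first;
        }
        return sorted;
    }

    // Average gap between consecutive starts, in milliseconds (0 if fewer than two samples).
    static double averageGapMillis(long[] sortedDeltas) {
        if (sortedDeltas.length < 2) {
            return 0.0;
        }
        long last = sortedDeltas[sortedDeltas.length - 1];
        return last / 1_000_000.0 / (sortedDeltas.length - 1);
    }

    public static void main(String[] args) {
        long[] deltas = deltasFromFirst(new long[] {5_000_000L, 1_000_000L, 3_000_000L});
        System.out.println(Arrays.toString(deltas));
        System.out.println(averageGapMillis(deltas) + " ms");
    }
}
```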

The reason for this total time may be related to how the OS manages sockets. I checked with netstat that my system was able to open several thousand local sockets, and verified with systemctl that that was the case. But these things can be difficult to know for sure… The main point of this article is that Java, with Project Loom, can have many thousands of virtual Threads consuming very few resources, so hopefully doing it by using actual HTTP connections is still useful information to have.

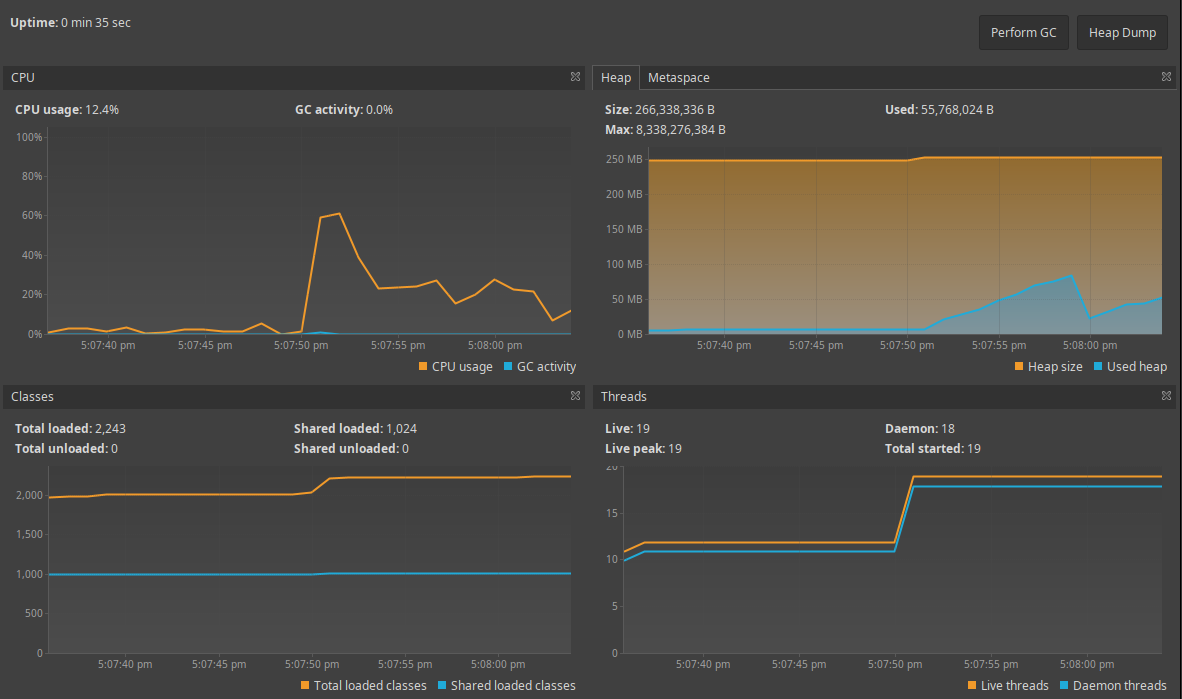

The output above shows that the maximum RAM consumed by the client was 173MB, but we can check how much heap was being actually consumed (thanks to the new and improved VisualVM, which is still my favourite free tool for doing this):

Very little. It never got close to 100MB, and after a GC run, it went right down to 28MB.

On the server, the situation was quite comfortable as well. I made the server print, once per second, the number of requests being handled at that moment:

$ time java -jar loom-maven/target/loom-maven-1.0-SNAPSHOT-shaded.jar

Live threads: 0

Live threads: 0

Live threads: 0

Live threads: 0

Live threads: 108

Live threads: 975

Live threads: 376

Live threads: 1000

Live threads: 997

Live threads: 963

Live threads: 999

Live threads: 622

Live threads: 771

Live threads: 1000

Live threads: 1000

Live threads: 978

Live threads: 503

Live threads: 354

Live threads: 0

Live threads: 0

Live threads: 0

Live threads: 0

^Cjava -jar loom-maven/target/loom-maven-1.0-SNAPSHOT-shaded.jar 16.81s user 3.22s system 16% cpu 1:59.21 total

avg shared (code): 0 KB

avg unshared (data/stack): 0 KB

total (sum): 0 KB

max memory: 227 MB

page faults from disk: 3

other page faults: 58533

Roughly as expected, in a time period of around 14 seconds, the server was handling at least a few dozen Threads, and for almost 10 seconds, it was handling close to 1_000.
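The full server code is in the Gist, but a per-second “Live threads” report like the one above could be produced with something along these lines. This is a sketch, not the code that was actually run: `LiveRequestCounter`, `track` and `startReporting` are hypothetical names, and the idea is simply to wrap each request handler so that a shared counter goes up on entry and down on exit.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

// Sketch only: counts in-flight handlers and reports the count once per second.
class LiveRequestCounter {

    private final AtomicInteger live = new AtomicInteger();

    // Runs the handler while counting it as live; the count drops even if the handler throws.
    <T> T track(Supplier<T> handler) {
        live.incrementAndGet();
        try {
            return handler.get();
        } finally {
            live.decrementAndGet();
        }
    }

    int current() {
        return live.get();
    }

    // Prints the live count every second on a daemon thread; shut the returned scheduler down to stop.
    ScheduledExecutorService startReporting() {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor(runnable -> {
            Thread thread = new Thread(runnable, "live-reporter");
            thread.setDaemon(true);
            return thread;
        });
        scheduler.scheduleAtFixedRate(
                () -> System.out.println("Live threads: " + live.get()), 1, 1, TimeUnit.SECONDS);
        return scheduler;
    }
}
```

A handler would then be wrapped as `counter.track(() -> handle(request))`, where `handle` stands for whatever produces the response.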

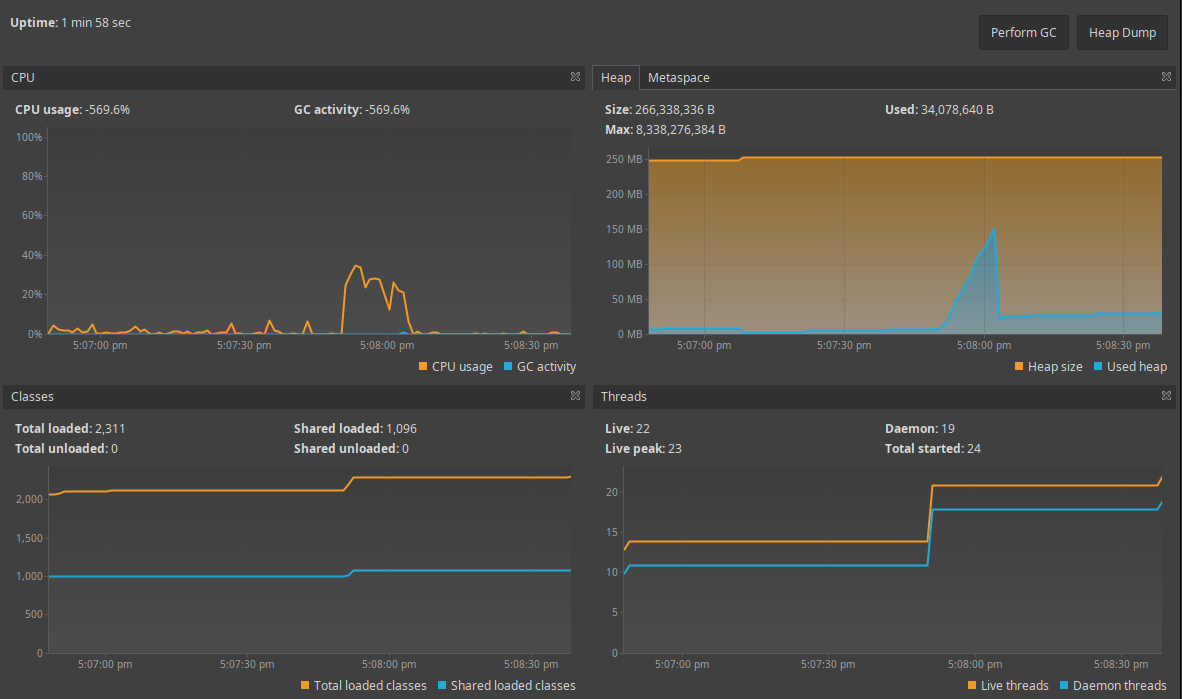

Its memory consumption was very reasonable (the JVM didn’t seem to have even bothered to GC while requests were being handled):

Notice also how the reported number of Threads is very low: maximum of 24. That’s probably because virtual Threads are not taken into consideration, which is expected as keeping track of potentially millions of virtual Threads would not be easy or even worthwhile.

Conclusion

This experiment shows that Virtual Threads are already performing quite well, despite still being in their early stages and with most of the optimizations hinted at in the “State Of Loom” article still ahead of us.

Most importantly, using them is extremely easy, even in existing code bases.

Being able to start Threads so easily and without concerns about having too many is quite amazing and will change how Java developers (and programmers using other JVM languages) write code. Even existing libraries and frameworks will benefit immensely from day one with minimal changes. All the complex machinery built around asynchronous IO and reactive frameworks that tried to solve the problem caused by heavyweight Threads might be relegated to the history books as writing blocking code with Virtual Threads that perform the same or better will become a reality.

There may still be some corner cases where these asynchronous frameworks will have a place(3), but for the majority of Java programmers and libraries, familiar synchronous, blocking code will definitely become the right choice: it’s much simpler and seems to be as performant.

Footnotes:

1. Gradle error when trying to build with JDK 15 EA:

java.lang.NoClassDefFoundError: Could not initialize class org.codehaus.groovy.vmplugin.v7.Java7

at org.codehaus.groovy.vmplugin.VMPluginFactory.<clinit>(VMPluginFactory.java:43)

at org.codehaus.groovy.reflection.GroovyClassValueFactory.<clinit>(GroovyClassValueFactory.java:35)

...

2.

3.